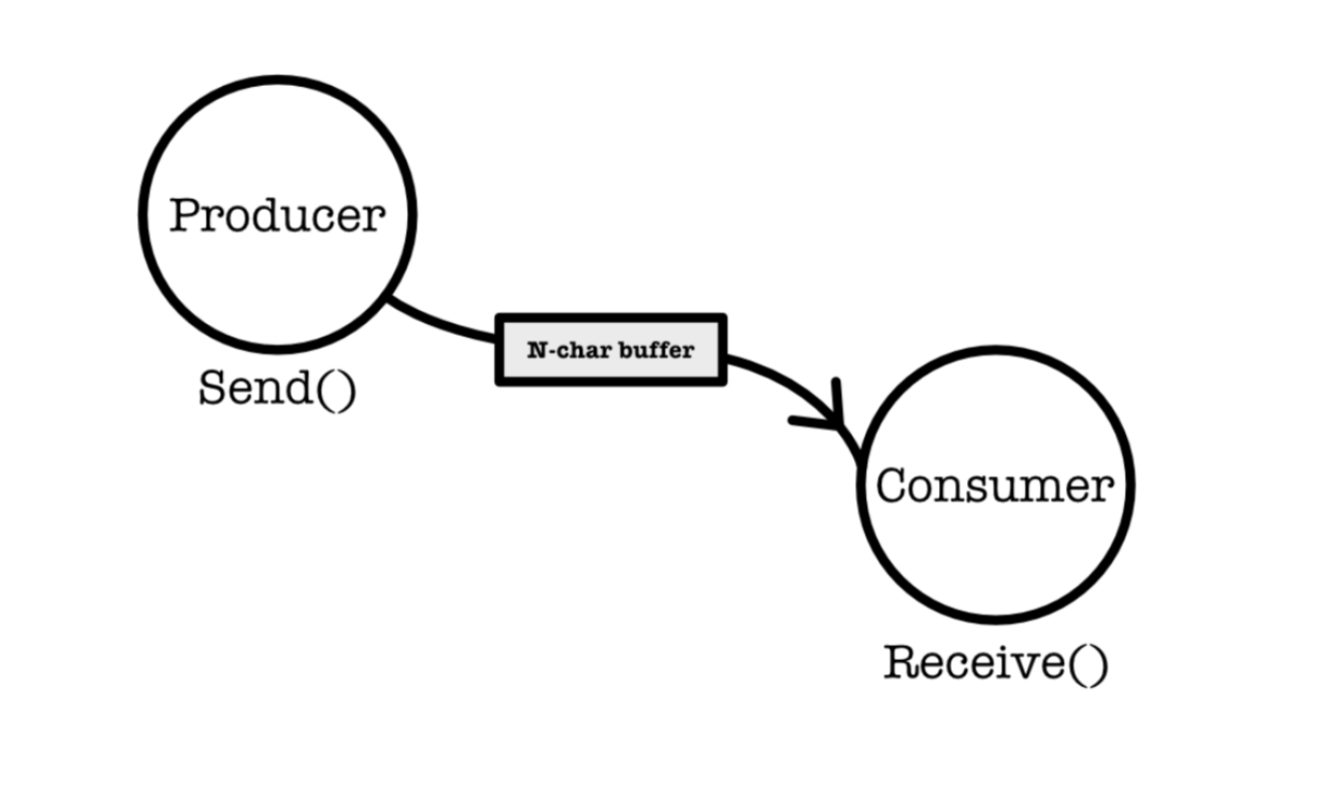

The Producer Consumer Problem

Consider the basic producer-consumer problem, where two asynchronous processes or threads are trying to write to and read from the same bounded N-character buffer concurrently.

Asynchronous and concurrent: both processes or threads make progress, but we cannot assume anything about their relative speed of execution.

Real Life Examples

In real life situations, a producer process produces information that is consumed by a consumer process. For example:

- A compiler (producer) producing assembly code that is consumed by an assembler (consumer).

- The assembler can also be a producer with respect to the loader: it produces object modules that are consumed by the loader.

- A web server produces (that is, provides) HTML files and images, which are consumed (that is, read) by the client web browser requesting the resource.

Precedence Constraints

The issue is that we require the following precedence constraints for these asynchronous processes:

- Producer cannot produce too much before consumer consumes

- Consumer cannot consume before producer produces

No Sync

To highlight what happens if we don’t synchronize both processes / threads, lets see a very simple producer program. Let counter and buffer of size N be a shared variable between the producer and consumer. counter keeps track of how many items there are in the buffer.

while (true) {

/* produce an item in next produced */

while (counter == BUFFERSIZE); /* do nothing */

buffer[in] = nextproduced;

in = (in + 1) % BUFFERSIZE;

counter++;

}

And the following very simple consumer program:

while (true) {

while (counter == 0); /* do nothing */

next consumed = buffer[out];

out = (out + 1) % BUFFERSIZE;

counter--;

/* consume the item in next consumed */

}

Each code is correct on its own, but incorrect when executed concurrently, meaning that without any proper synchronisation attempts, the precedence condition will be violated.

This is due to the presence of race condition.

The Race Condition

Assume buffer and counter are shared between the two processes / threads. The instructions counter ++ and counter -- are not implemented in a single clock cycle (it is not atomic).

Non-atomic ++ and –

counter++ may be implemented as follows in assembly language:

LDR(counter, R2)

ADDC(R2, 1, R2) || or SUBC for counter--

ST(R2, counter)

Race Condition Outcome 1

The execution between counter ++ and counter -- can therefore be interleaved. For example assuming the original value of counter is 4 and that there’s only 1 CPU, the following interleaved execution between the two may happen may happen:

LDR(counter, R2) | Producer executes, then interrupted, R2’s content:4

...IRQ on producer, save state, restore consumer

LDR(counter, R2) | Consumer executes, R2 contains 4

SUBC(R2, 1, R2) | R2 contains 3, then consumer is interrupted

...IRQ on consumer, save state, restore producer

ADDC(R2, 1, R2) | R2 contains 5

ST(R2, counter) | value 5 is stored at counter, then IRQ

...IRQ on producer, save state, restore consumer

ST(R2, counter) | value 3 is stored at counter

Therefore the final value of the counter is 3.

Race Condition Outcome 2

We can try another combination of interleaved execution:

LDR(counter, R2) | Producer executes, then interrupted R2’s content:4

...IRQ on producer, save state, restore consumer

LDR(counter, R2) | Consumer executes, R2 contains 4

SUBC(R2, 1, R2) | R2 contains 3, then consumer is interrupted

ST(R2, counter) | value 3 is stored at counter

...IRQ on consumer, save state, restore producer

ADDC(R2, 1, R2) | R2 contains 5

ST(R2, counter) | value 5 is stored at counter

Therefore in the case above, the final value of the counter is 5.

You may easily find that the value of the counter can be 4 as well through other combinations of interleaved execution of counter ++ and counter --.

Hence the value of the counter depends on the order of execution that is out of the user’s control (the order of execution depends on the kernel’s scheduling handler, unknown to the user).

In such a situation where several processes access and manipulate the same data concurrently and the outcome of the execution depends on the particular order in which the access takes place, is called a race condition.

A race condition is NOT desirable. We need to perform process synchronization and coordination among cooperating processes (or equivalently, threads cooperation) to avoid the race condition.

The Critical Section Problem

We define the regions in a program whereby atomicity must be guaranteed as the critical section.

Consider a system consisting of n processes {P0, P1,..., Pn−1}. Each process may have a segment of instructions, called a critical section (CS). The important feature of the system is that when one process is executing its critical section, no other process is allowed to execute its critical section.

In the consumer producer sample code above, the critical section in the producer’s code is the instruction counter++ while the critical section in the consumer’s code is counter-—.

In the critical section the (asynchronous) processes may be:

- Changing common variables,

- Updating a shared table,

- Writing to a common file, and so on.

To support the CS, we need to design a protocol that the processes can use to cooperate or synchronize.

It is a challenging problem to guarantee a critical section. Therefore, having a critical section in your program is a problem, and it requires complex synchronisation solutions.

There are two basic forms of synchronization:

- The mutual exclusion: No other processes can execute the critical section if there is already one process executing it.

- Condition synchronization: Synchronize the execution of a process in a CS based on certain conditions instead.

Requirements for CS Solution

A solution to guarantee a critical-section must satisfy the following three requirements:

- Mutual exclusion (mutex): No other processes can execute the critical section if there is already one process executing it (in the case of condition synchronization, this is adjusted accordingly)

- Progress: If there’s no process in the critical section, and some other processes wish to enter, we need to grant this permission and we cannot postpone the permission indefinitely.

- Bounded waiting: If process A has requested to enter the CS, there exists a bound on the number of times other processes are allowed to enter the CS before A. This implies that CS is also of a finite length, it cannot loop forever and will exit after a finite number of instructions.

Properties

The requirements above result in the following property to a CS solution:

- Safety property: no race condition

- Liveness property: a program with proper CS solution will not hang forever (because technically no progress IS mutex).

Solution Template

The solution template to a CS problem is as follows:

while(true){

[ENTRY SECTION]

CRITICAL SECTION ...

...

[EXIT SECTION]

REMAINDER SECTION ...

...

}

The protocol to approach a CS in general causes the process to:

- Request for permission to enter the section (entry section).

- Execute the critical section when the request is granted

- Exit the CS solution

- There may exist an remainder section

The rest of the program that is not part of the critical section is called the remainder section.